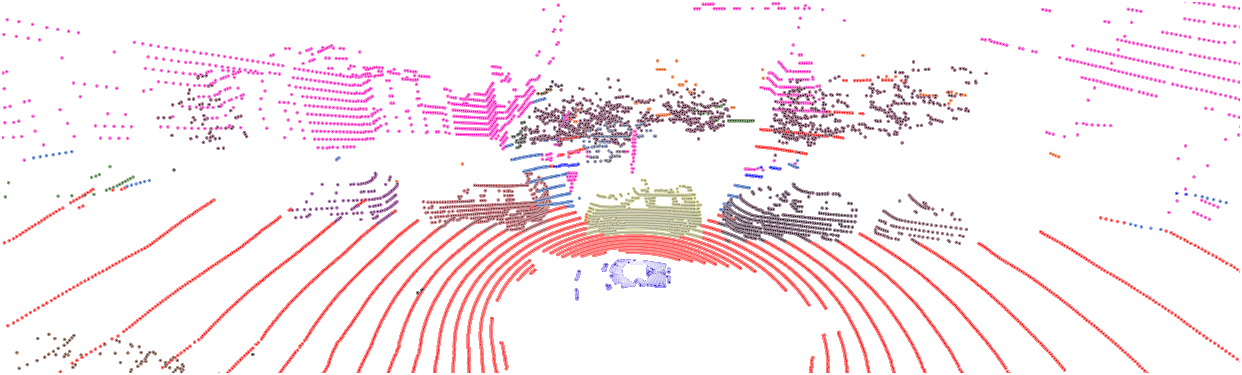

Autonomous vehicles must operate in challenging urban environments that contain a wide variety of agents and objects, making comprehensive perception critical for robust and safe navigation. Typically, perception tasks reason independently about the semantics of the environment and about the recognition of object instances. Panoptic segmentation is a scene understanding problem that aims to provide a holistic solution by unifying the semantic and instance segmentation tasks. It simultaneously segments the scene into ‘stuff’ classes, which comprise background objects or amorphous regions such as road, vegetation, and buildings, and ‘thing’ classes, which represent distinct foreground objects such as cars, cyclists, and pedestrians.
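To make the distinction between ‘stuff’ and ‘thing’ classes concrete, here is a minimal sketch of how per-point semantic labels and instance ids could be merged into a single panoptic labeling. It is an illustration only, not the EfficientLPS fusion method: the class sets, the function name `merge_panoptic`, and the convention that instance id 0 means "no instance" are all assumptions made for this example.

```python
# Hypothetical class sets, chosen for illustration only.
STUFF_CLASSES = {"road", "vegetation", "building"}
THING_CLASSES = {"car", "cyclist", "pedestrian"}


def merge_panoptic(semantic, instance):
    """Combine per-point semantic labels and instance ids into panoptic labels.

    semantic: list of class names, one per LiDAR point.
    instance: list of non-negative ints, one per point (0 is assumed to
              mean "no instance").
    Returns a list of (class_name, instance_id) tuples, one per point.
    """
    if len(semantic) != len(instance):
        raise ValueError("semantic and instance must have the same length")

    panoptic = []
    for cls, inst in zip(semantic, instance):
        if cls in STUFF_CLASSES:
            # Stuff regions are amorphous, so any predicted instance id is dropped.
            panoptic.append((cls, 0))
        elif cls in THING_CLASSES:
            # Thing points keep their instance id so objects stay distinct.
            panoptic.append((cls, inst))
        else:
            raise ValueError(f"unknown class: {cls}")
    return panoptic


points_semantic = ["road", "car", "car", "pedestrian", "vegetation"]
points_instance = [0, 1, 2, 1, 7]
result = merge_panoptic(points_semantic, points_instance)
```

In this sketch the two `car` points end up as separate objects because their instance ids differ, while the `vegetation` point loses its spurious instance id because it belongs to a stuff class.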

EfficientLPS Demo

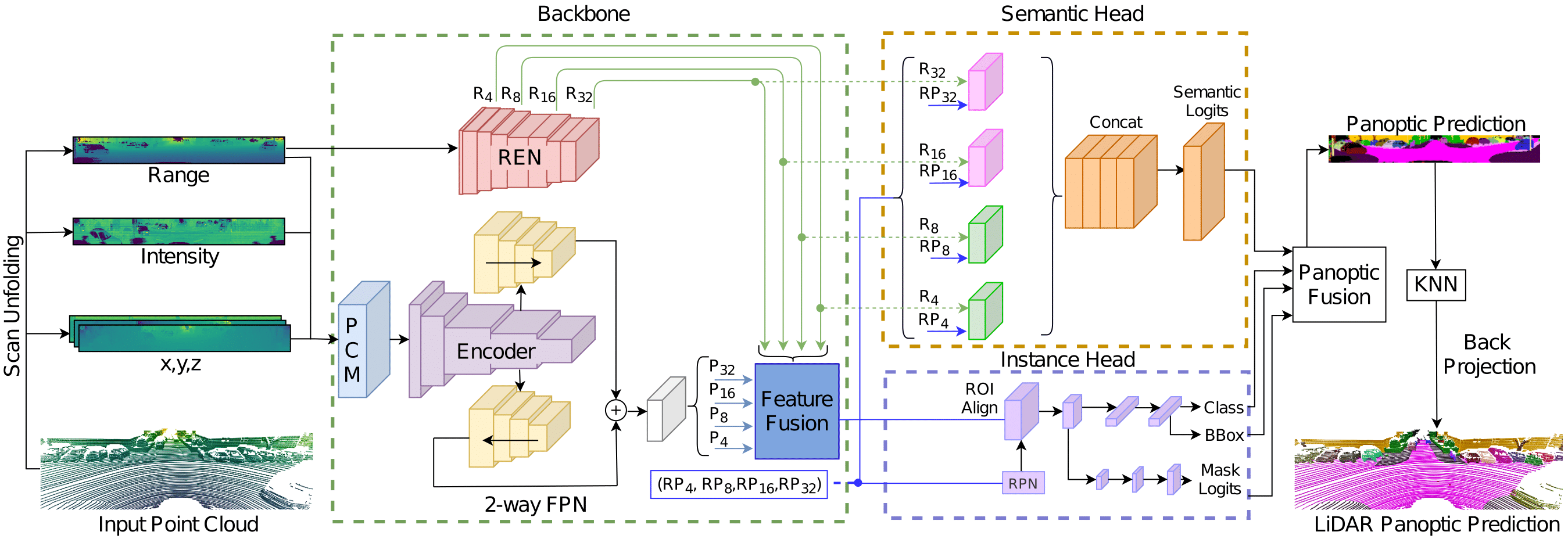

Top-Down Deep Convolutional Neural Network Approach for LiDAR Panoptic Segmentation

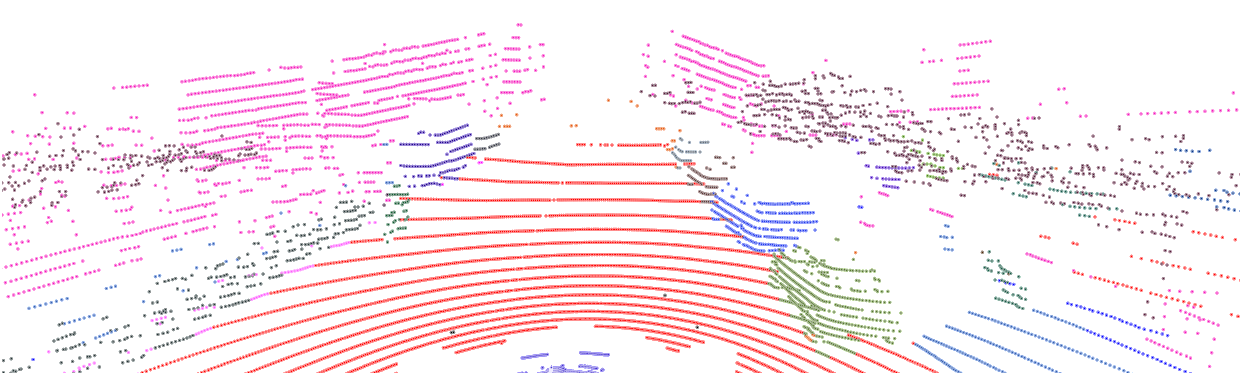

This demo shows the LiDAR panoptic segmentation performance of our EfficientLPS model trained on the SemanticKITTI and nuScenes datasets. EfficientLPS is currently ranked #1 for LiDAR panoptic segmentation on the SemanticKITTI leaderboard. To learn more about LiDAR panoptic segmentation and the approach employed, please see the Technical Approach. To view the demo, select a dataset from the drop-down box below, then click on a LiDAR scan in the carousel to see live results.
